Clustering

Introduction

All of our previous analysis of tweets relating to school shootings have been limited to tweets containing hashtags. We operated under the assumption that a hashtag could act as a proxy for a label by grouping hashtags commonly associated with news tweets and grouping hashtags commonly associated with tweets expressing opinions. Clearly this approach has several shortcomings--most notably the lack of practical significance.

In this analysis, we will perform clustering to identify groups in the tweets that hopefully transcend the less-interesting binary classification of tweets as being news-oriented or opinion-oriented. We expect that our clustering analysis will split tweets by their emotional sentiment (perhaps empathetic/critical) or by their political orientation (tighter gun laws / looser gun laws).

Theory

Clustering is a field of unsupervised machine learning that groups observations in data based on how closely they are located to each other. As a brief introduction, we discuss a few different clustering techniques below:

K-Means

Not to be confused with K Nearest Neighbors, K-Means is a clustering algorithm that accepts a parameter $K$ determining the number of clusters to identify in the data. For each cluster, a centroid is determined which serves as the arbitrary center of the cluster. The centroid can be calculated using mean, median, or other metrics to determine the center of the observations.

The algorithm begins by selecting K random arbitrary centroids in the space. From there, it iteratively updates to represent the optimal center for K clusters in the dataset based on some distance metric (usually Euclidean distance). Once the iterations no longer update the centroids, the centroids are considered to have converged.

K-Means clustering is ideal for clustering data that follow a Gaussian distribution. Gaussian distributions appear as a circle or oval in 2-dimensional space and as spherical or ovaline clouds in 3-dimensional space. Dimensions greater than three can be difficult to visualize, so often the best approach for determining the optimal clustering algorithm is to try multiple ones.

Density-Based Spatial Clustering of Applications with Noise (DBSCAN)

DBSCAN is an alternative clustering algorithm that is based on grouping observations by their density. In this case, density refers to the concentration of observations in a relatively small area of the space. In DBSCAN, outliers are omitted from clusters because they are, by definition, isolated from high-density clusters of data.

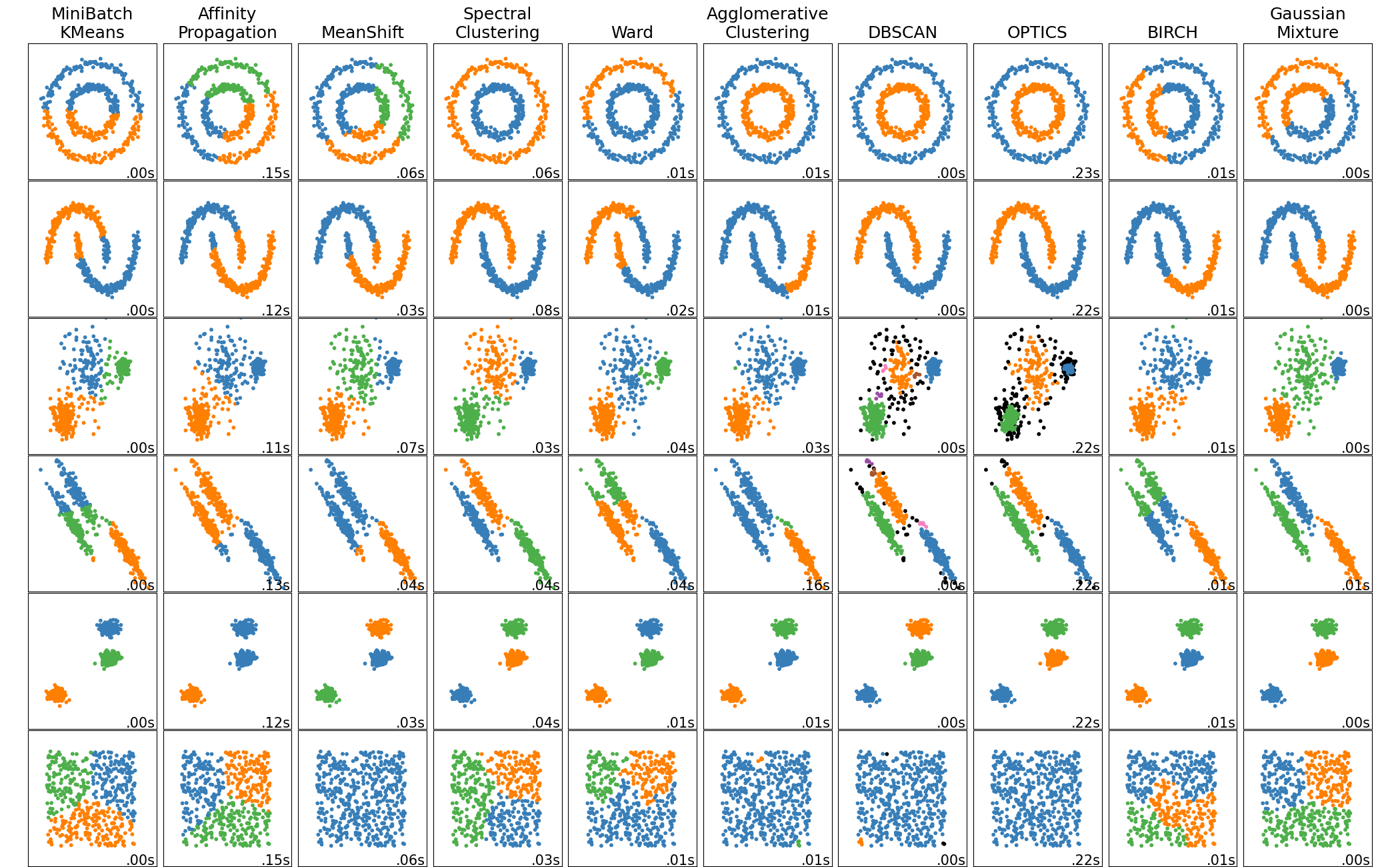

The fundamental difference between DBSCAN and K-Means is that DBSCAN is very good at identifying clusters of data that are not linear / do not follow a Gaussian distribution. Consider the graphic below, published by Scikit Learn, illustrating the results of various clustering algorithms on toy datasets of different shapes.

As we can see, DBSCAN is much better at clustering irregular shapes than K-Means.

Hierarchical Clustering

Hierarchical clustering, unlike the clustering algorithms defined above (which are considered partitional clustering algorithms), is an approach to clustering that assigns data to different "sub-clusters" at different levels of the hierarchy. For example, at the highest levels, the data are separated into few clusters, but the clusters are large. At the lower levels of the hierarchy, the clusters get smaller but are more plentiful.

Oftentimes, the size and number of clusters at each hierarchy can effectively be illustrated in a dendrogram. This approach allows the user to determine the optimal number/size of clusters "at a glance"--depending on the use case of the analysis.

Methods

Data Selection

Because we do not consider any labels in clustering, we are free to use the entire set of tweets in this analysis. Using this Python script, we attempt to create a bag of words model for a random selection of 50,000 tweets (rather than only tweets with hashtags). However, due to computing limitations we will settle for the same BOW model that we have used in our previous analyses.

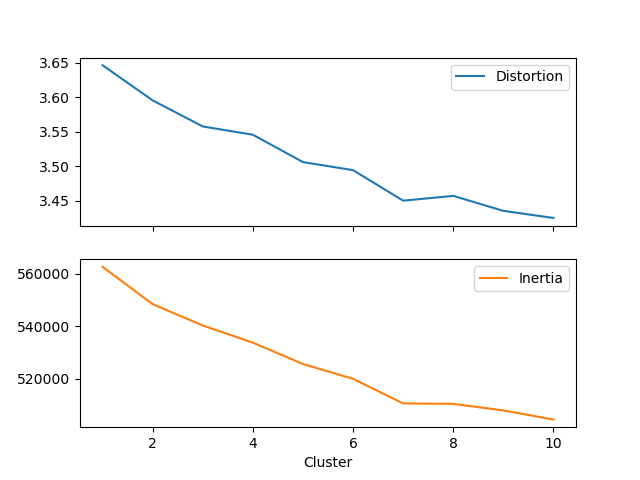

Hyper-parameter tuning

Using K-Means, we arrive at the following graphs for determining the optimal K number of clusters for our Twitter data. Using the elbow method, we determine that an optimal number of clusters, based on the distortions and inertia of the model, is K=7.

| Cluster | Distortion | Inertia |

|---|---|---|

| 1 | 3.646 | 562,647 |

| 2 | 3.595 | 548,418 |

| 3 | 3.558 | 540,262 |

| 4 | 3.546 | 533,720 |

| 5 | 3.506 | 525,581 |

| 6 | 3.495 | 519,913 |

| 7 | 3.450 | 510,538 |

| 8 | 3.457 | 510,362 |

| 9 | 3.436 | 507,861 |

| 10 | 3.425 | 504,381 |

Final results

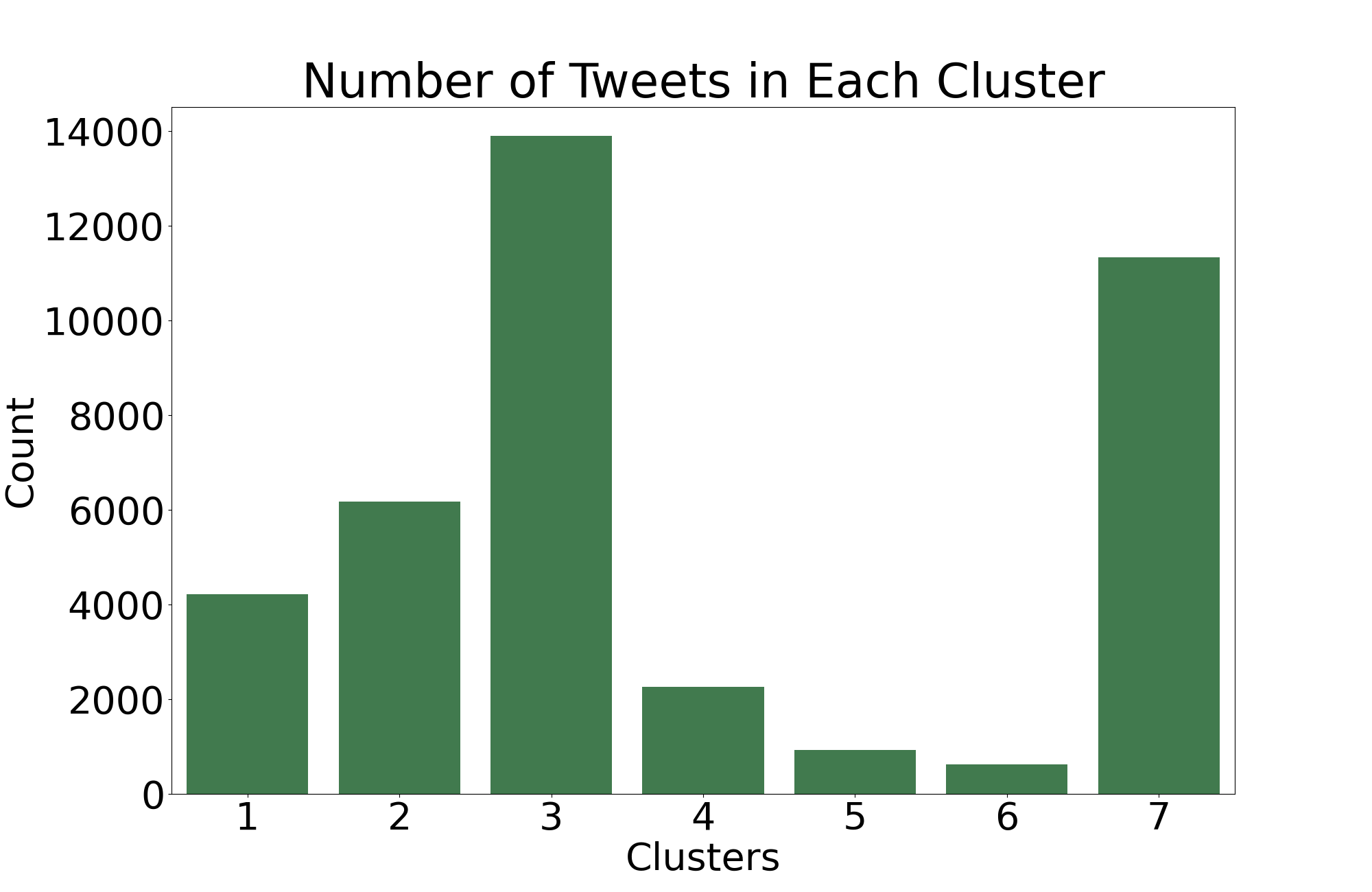

We now perform a final clustering using our optimal K and save our results. Using this optimal model, we can see below the number of tweets that the algorithm assigns to each cluster. By itself, this isn't terribly insightful, but it may provide more insight once we identify the possible patterns behind the clusters.

Results

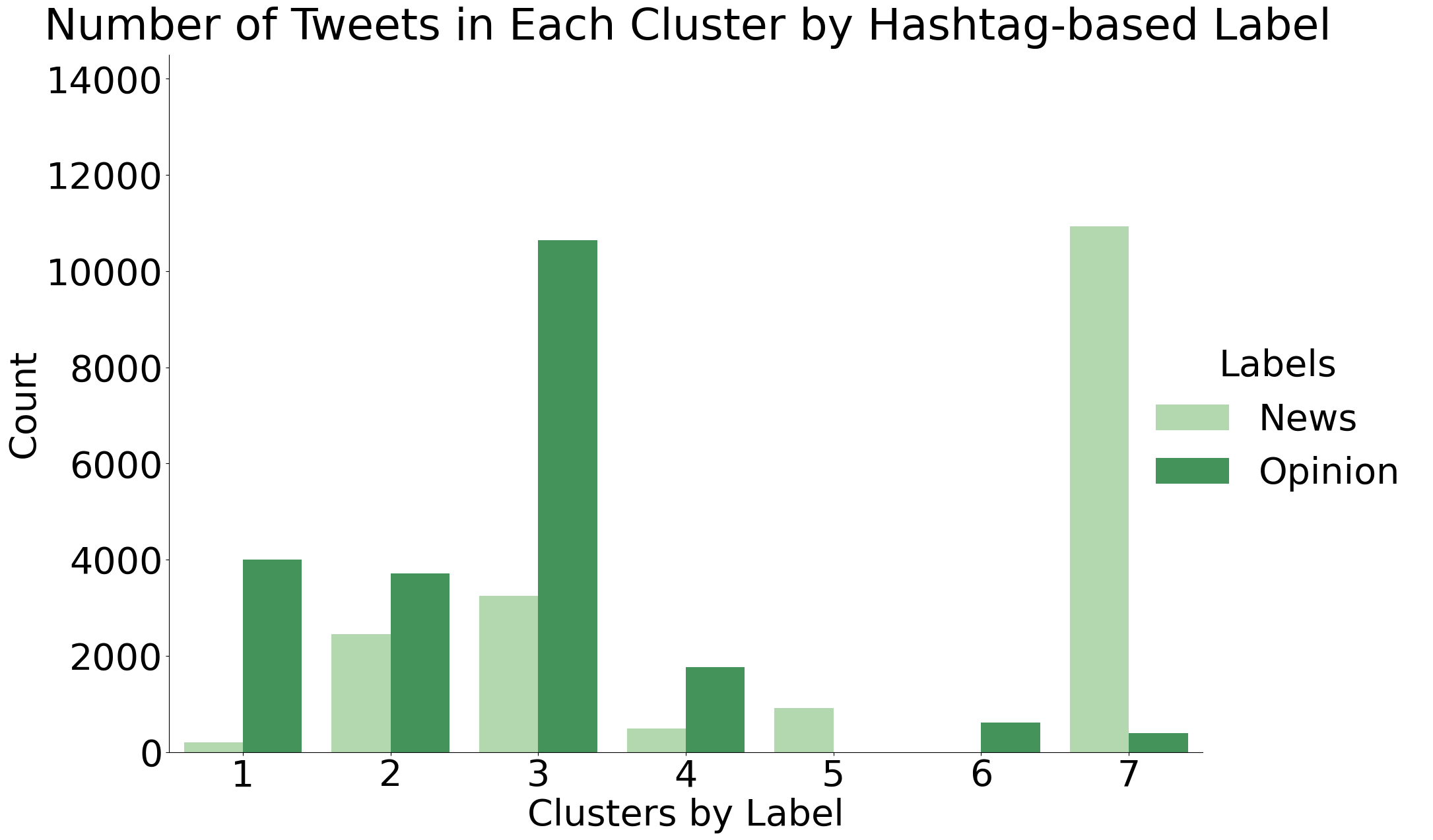

Perhaps one of the most insightful observatios we can make about the clusters is how they compare to the hashtag-based labels that we used for the Naïve Bayes algorithm. Do the clusters act as subcategories within the scope of news vs opinion tweets? Or is there more overlap?

As we can see, there is indeed very heavy overlap between the clusters assigned by K-Means and the hashtag-based News/Opinion labels. Cluster 3 seems to be very strongly correlated with the opinion-based tweets while Cluster 7 seems to be correlated with news-based tweets.

At a later date, we will return to this analysis and examine the clusters at a much closer level to identify any patterns distinguishing the clusters aside from the news vs. opinion distinction.

Conclusions

Unlike most of the previous analytical approaches of this project (Naïve Bayes, Decision Trees, and SVM) in which we try to make predictions about the data, this section attempts to learn more about the data without any associated predictions. In truth, this project is best suited for this sort of non-predictive analysis. It allows us to gain insights about the data, which is the main goal of this project.

This analysis further validated the results of the Naïve Bayes algorithm by confirming the assumptions behind labeling tweets as being opinion-based or news-based depending on their hashtags. A possible next step for this analysis would be to cluster all 2.5 million tweets and track the levels of how many tweets occur in each cluster over time.

References

- Clustering algorithm performance by dataset shape chart is taken from Scikit Learn's website.